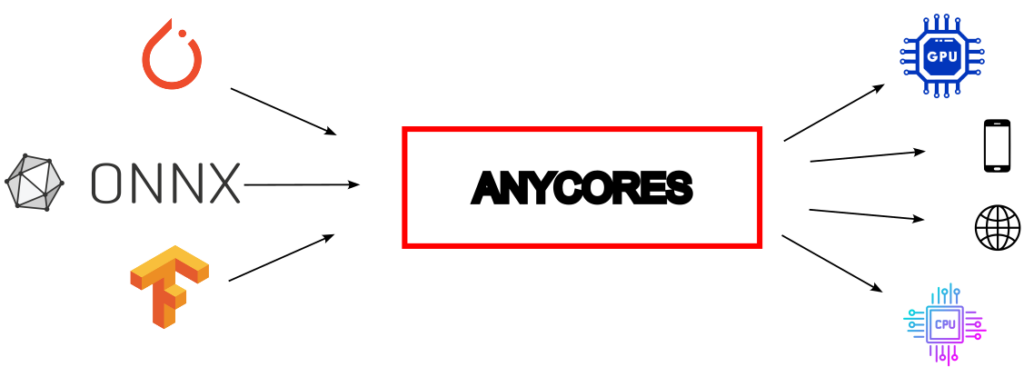

Fast Device Agnostic AI model tuning and inference

Consultation, optimized networks zoo and platform for reducing AI model cost

Faster execution

- Reduce inference time over 10x times

- Cost reduction

- Footprint reduction during model deployment

Device agnostic

- Nvidia, AMD gpus

- Intel, ARM, AMD cpus

- Servers and edge devices

Request a demo

You can contact us for help to reduce the cost of your AI models.

Contact us at info@anycores.com

We are developing a platform till the end of 2024, to provide an automated solution

Click here for more explanation

Machine learning models, especially deep neural networks are tend to be computationally heavy. Serving a deep learning based application in the cloud is expensive. To reduce these costs it is possible to optimize the performance of the networks. A deep learning compiler can provide even 10x acceleration. It also is tempting to move the computation from the cloud to the edge. Running AI models on the edge is also challenging due to the wide array of devices and application scenarios. A deep learning compiler can translate the AI model developed (trained in pytorch, tensorflow or any other framework) into a new environment (e.g. Windows Server, AMD gpu and .NET based backend) without the need for any dependency and the reimplementation of the model.

A deep learning compiler can provide highly optimized, low footprint networks, tailored to a specific deployment scenario. The output of the model can be a python wheel package, a nuget package, a jar package or a header and dll (or so).